Perhaps the Rum Project is the most intelligent rum website on the net, not least due to our small but extremely competent cadre of posters, moi excepted. Or perhaps not. And it's not like we hate rum here - quite the opposite. The Project remains entirely independent and free of commercial influence. Lastly, as for the reviews here, they are honest in the sense that most rums' scores fall nicely into a credible normal distribution, the well-known bell curve. In the distribution of rum scores you'll find but a few great rums, and likewise just a few really awful ones. Like everything else in life...

Everything tends to center roughly about an average, with ever fewer rums on either side of the median. That's life. Still, of the perhaps 200 spirits we've reviewed I can remember only two that were offered to us. So how is it the Arctic Wolf gets literally hundreds of freebies, one after another in conveyor belt fashion? It's like the scene in "I Love Lucy" where Lucy and Ethel get jobs at a candy factory, where they are totally inept - especially at wrapping chocolates - due to a speeding conveyor belt that has them stuffing chocolates in their mouths, blouses, and hats. And so it is for the ravenous predator.

Now it's not the goal to pick on this reviewer, but seeing as how he publishes reviews like Wham-O sold hula hoops, it begs our attention. Recently he listed all his rum review scores on a single page, thus saving untold hours and making an analysis both easy and possible. And since this site had not been reviewed for a very long time, it seemed obligatory to determine whether possible bias was perhaps still evident. Or not.

Take a look...

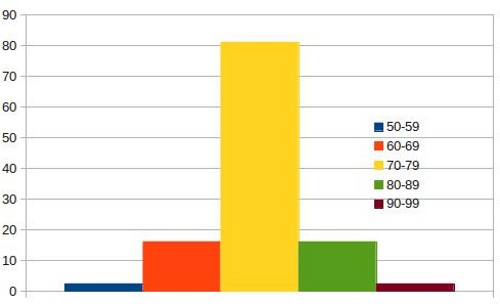

Based on his reviews of 119 "aged" rums, if his scoring was normal, it'd look like this...

Yup, the old bell curve we all know and love, especially if we were on the right side of it, lol.

Nice and normal, with scores centered around the median, and ever fewer rums as scores became higher or lower. In the real world this correlates with A-B-C-D-F, 1 to 5 stars, 50-99, or Poor-fair-average-good-great. "Average" is considered to be "C", "3-stars" or "75" as expressed in by far the most common and well understood scales (all based on the Standard American Grading System). So how did this reviewer's scores arrange themselves on the same common scale?

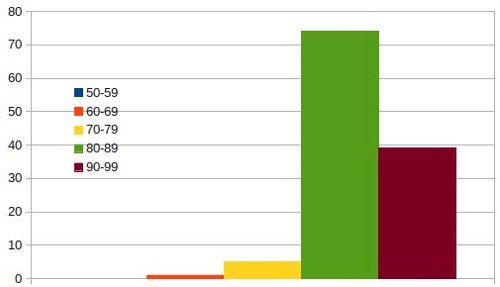

I'll save you the spreadsheet, but if no horrible mistakes were made, the average score is an amazing 87.6, about 13 points higher than most would expect. Worse yet, based on the usual scoring systems, there wasn't a single rum found "poor", only one found "fair" and just a paltry five rums were identified as what most of us understand as "average". At the same time 74 rums fell into the typical "good" range and an absolutely astounding 39 products scored in the stratospheric "great" range of 90-99!

Is it any wonder that he gets freebies? The distributors and distillers know that the Wolf's actual "average" score is close to ninety points and further that 94% of his reviews will fall into one of what most reviewers consider the top two categories, "good" or "great", the standard 4-star or 5-star categories.
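For anyone who'd rather check the arithmetic than take my word for it, here is a minimal Python sketch of the tally. The five counts are the ones quoted above; the variable names are mine and purely illustrative. The top two categories come to 113 of 119 rums, just under 95% (the 94% quoted above is the same figure with the decimals dropped).

```python
# Counts per category as quoted in the post (119 "aged" rums in total).
# The dictionary and variable names are illustrative, not from the original spreadsheet.
counts = {"poor": 0, "fair": 1, "average": 5, "good": 74, "great": 39}

total = sum(counts.values())
top_two = counts["good"] + counts["great"]
top_two_share = 100 * top_two / total

print(f"total rums reviewed: {total}")
print(f"good or great: {top_two} ({top_two_share:.1f}%)")

# Share of each category, to compare against what a bell curve would give.
for name, n in counts.items():
    print(f"{name:>8}: {n:3d}  {100 * n / total:5.1f}%")
```

A bell curve would pile most of those 119 rums into "average"; here that bucket holds five.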

But there's a problem here. These scores are not based on a standard breakdown, but on a very unusual system of the reviewer's own design. Thus, an "88" here will not compare to the "88" of the BTI, F. Paul Pacult, Ralfy, Robert Parker or almost any other reviewer, all of whom rely on a version of the American standard system.

Is this by design?

You see what this reviewer has done is to invent his own rather curious scoring system. His scores run from 0 to 100 in eleven increasingly smaller ranges (?). Even though this entire range of 0 to 100 is proposed as available for scoring, the lowest reported score I could find for any aged rum was 68.5 (so apparently the scores and ranges from 0 to 68 don't count). Then what are arbitrarily called "mixers" seem assigned to be given scores from 70 to 84. The scoring guide says so. The range of 85 to 89 appears to be a crossover range designated for scoring excellent mixers and possible sippers, while all rums designated as "primarily sippers" get at least a 90.

It's quite a mish mosh and there's really nothing else quite like it on the net. The big curiosity is just why anyone would reject general practice and well established de facto standards that everyone understands.

There's no way to really compare this MacGyver'd, duct tape scoring system to what we all know and expect from the usual and customary reviews from most other reviewers. That said, my dear friends, you may now understand why the actual average score reported by this reviewer is 87.6, and also why the distillers can't seem to send him product fast enough. They know that, based on the general understanding and acceptance by the public of the standard ranges (F-A, 1-5 stars, 50-100), this site's average of 87.6 sounds a whole lot better than the "C", "3-stars" or "75" the same rum might get elsewhere.

But fair is fair...

After all, it may just be happenstance that this reviewer's system is so darned high. He may have been completely well intentioned. So if I may, I've devised a conversion chart to make it easier for monkeys to properly compare scores to those scales used by practically every other reviewer of almost any product:

1-Star: This reviewer's 69-74

2-Star: 75-80

3-Star: 81-87

4-Star: 88-93

5-Star: 94-100

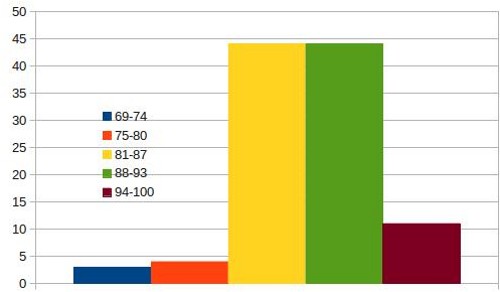

Kudos to Robert Parker. The only completely unbiased way to reinterpret this reviewer's scores is to use the actual reported scores, from his lowest of 69 up to 100, and thence to subdivide this actual range into five equally spaced sub-ranges. We can then fairly compare his own actual range and scores and see how or if they are weighted.

By doing so it's then possible to make a fair comparison with the de facto American standard systems that we expect. This reviewer's scores may now be fairly compared to his own actual scores divided into the usual five equal ranges.
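The conversion chart above is simple enough to sketch in a few lines of Python. The function name `to_stars` is hypothetical, and one assumption is mine rather than the chart's: a fractional score lands in the band whose lower bound it has reached, with the lowest reported score of 68.5 counted as the bottom of the 1-star band.

```python
def to_stars(score: float) -> int:
    """Map one of this reviewer's scores onto the 1-to-5-star conversion chart."""
    if score > 100:
        raise ValueError("scores above 100 are outside the chart")
    # Lower bound of each band, taken from the chart (69-74, 75-80, 81-87, 88-93, 94-100).
    # Assumption: 68.5, the lowest score found, is treated as the floor of the 1-star band.
    lower_bounds = [(94, 5), (88, 4), (81, 3), (75, 2), (68.5, 1)]
    for bound, stars in lower_bounds:
        if score >= bound:
            return stars
    raise ValueError("scores below 68.5 are outside the chart")


# A few of the scores mentioned in the post:
for s in (68.5, 88, 90, 100):
    print(s, "->", to_stars(s), "stars")
```

Under this chart an "88" converts to 4 stars, and so does the 90 that every "primarily sipper" is guaranteed.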

That has now been done. Splice the main brace!